Buried deep within Houston’s underbelly lies a forgotten structure that was once the lifeblood of a city notorious for its scorching heat. Built in 1926, the Cistern at Buffalo Bayou Park provided water for hundreds of thousands of people crazy enough to live in Houston, in an era when air conditioners were a rare luxury that only a few could afford.

For roughly 80 years, the reservoir stored millions of gallons. Countless Houstonians drank their first and last glass of water from it. In 2007, the city had outgrown its capacity, so it drained the water and slammed the hatch shut. Nearly a decade later, the cistern reopened as a tourist attraction.

Entering the gigantic structure offers what I can only describe as a glimpse into a brutalist fractal. Enormous columns create geometric reflections, and the remaining foot-deep layer of water creates an illusion of infinity. Staring into the abyss, I am reminded of the current state of large language models.

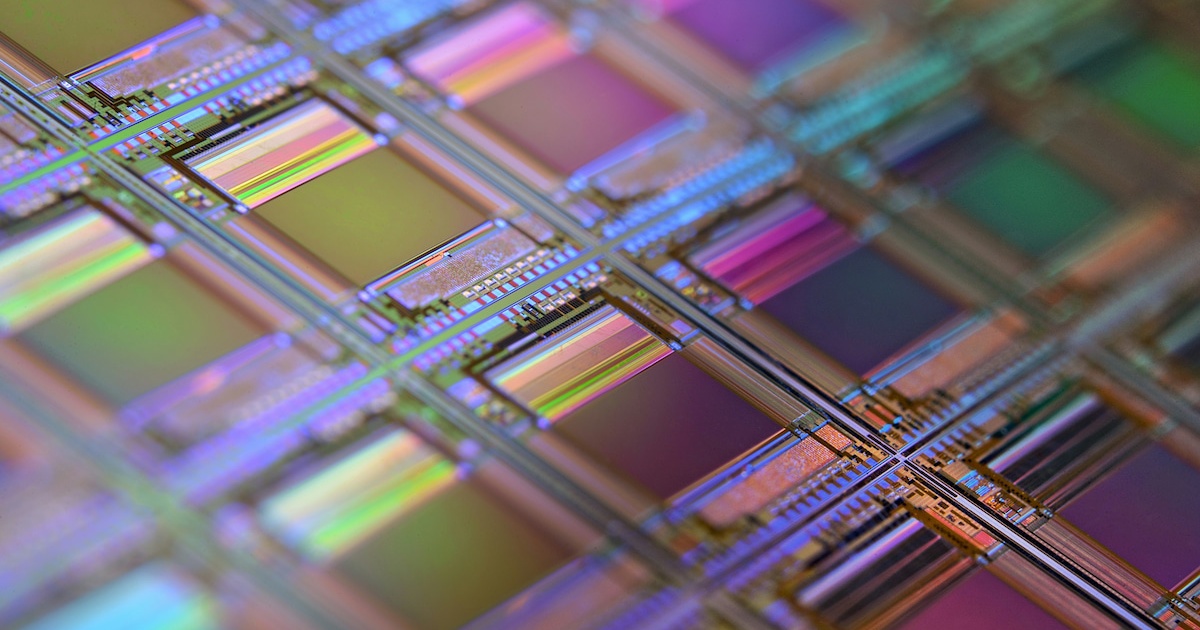

Like the cistern, large language models are reservoirs of data. Recently, a 30-year-old algorithm had the dust shaken off and was brought back to life, producing surprising results when run on large datasets. That same year, the reservoir opened for tours.

In 2017, a team of researchers at Google published the paper “Attention Is All You Need,” which introduced the Transformer architecture and trained it on large text datasets. Soon after, OpenAI had a breakthrough with the Generative Pre-trained Transformer, eventually making “GPT” a household name.

Like a person trying to survive the humid Texas heat, artificial intelligence startups developed an unquenchable thirst for data. Companies built larger and larger reservoirs by convincing investors that with enough data, GPUs, and power, artificial general intelligence (AGI) could drink its first glass of water.

Brute-force scaling of large language models will eventually plateau. AI startups going 100 mph will soon hit a cement wall with the current architecture. Claiming that the current state of machine learning is actually AGI is the equivalent of proclaiming that the reservoir not only held water but created it.

AI, in its current form, is a mirror held up to humanity. It returns our own words in response to every prompt, which makes humans a key component of the software. Human consciousness is not linear, and current machine learning algorithms have a bottleneck.

Predicting the most likely next word from existing text is far from self-awareness. It only tells us what we already know. AI startups are plagiarizing the work of humanity on a scale as large as the datasets their models are built on. As automation replaces jobs, it raises a question: do these companies have a financial obligation to civilization? This question is as complex as whether water is a fundamental right.
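To make “predicting the most likely next word” concrete, here is a toy sketch. It uses simple bigram counting, a far cruder technique than the Transformer models discussed here, and the corpus and function names (`train_bigrams`, `predict_next`) are hypothetical. It illustrates the mirror point: the model can only hand back words it has already seen.

```python
from __future__ import annotations

from collections import Counter, defaultdict


def train_bigrams(text: str) -> dict[str, Counter]:
    """Count how often each word follows each other word in the training text."""
    words = text.lower().split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(follows: dict[str, Counter], word: str) -> str | None:
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = follows.get(word.lower())
    if not counts:
        return None  # the model has nothing to say about words outside its data
    return counts.most_common(1)[0][0]


corpus = "the cistern held water the cistern held memories the city drank water"
model = train_bigrams(corpus)
print(predict_next(model, "cistern"))    # held
print(predict_next(model, "hurricane"))  # None
```

Real language models replace the frequency table with a neural network over long contexts, but the output is still drawn from patterns in the training text.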

We can make the reservoirs larger, but eventually they will yield only diminishing returns. Achieving true artificial general intelligence will require advances in hardware and programming.

The human brain has roughly 86 billion neurons, which by one popular estimate gives it a storage capacity equivalent to about 2.5 petabytes (2.5 PB = 2,500 TB = 2,500,000 GB). A mere fraction, around 10–20%, is dedicated to memory-related functions. Most of the neurons are used for processing.

Brain Storage Capacity Estimate:

- Total storage: 2.5 PB = 2,500,000 GB
- Memory allocation: 10–20% of total
- Lower bound: 2,500,000 GB × 0.10 = 250,000 GB
- Upper bound: 2,500,000 GB × 0.20 = 500,000 GB

Achieving true AGI will require progress beyond datasets, including advances in both hardware and real-time data processing. Quantum computing, though still in its earliest stages, shows encouraging signs of what hardware could achieve.

This brings me back to a larger question. We should not be asking whether it is possible to create artificial general intelligence, but whether we should. Water can bring life to the thirsty, but as a hurricane, it can also drown an entire city. While we wait for the storm, humanity stares into the cistern’s abyss.